From Monolith to Distributed Systems in Rust: A Practical Introduction

A hands-on journey through small, working projects to understand when and why architecture needs to evolve

TL;DR

- For beginners, tinkerers, hobbyists, amateurs, and early-career developers…

- Start with a monolith. Seriously. A single binary that works is worth more than a complicated system that sort of works

- Only split code when the pain of not splitting becomes real: hard to read, hard to test, hard to work on with others

- Do not refactor while reorganizing. Move the code first, verify the output is identical, then improve. One concern at a time

- Move to a Modular Monolith using an Hexagonal Architectureas soon as you decide to leave the initial mono file monolith. This is NOT over engineering, this is an investment.

- Traits define contracts. The application decides which methods it needs; the components fall in line. That is Dependency Inversion, and it costs almost nothing to set up

- Cargo workspaces are the sweet spot for most Rust projects. Independent builds, independent tests, one repository. Hard to beat

- Every architectural boundary we add (DLL, process, HTTP, broker) makes the system harder to debug. Serialization, network calls, and message routing are not free

- The business logic should never care about how it is called. Whether it runs inside a function, behind an FFI boundary, or on the other side of a NATS broker, 42 * 2 is still 84

- Process isolation buys crash containment. If a service panics, the rest of the system keeps running. That is worth something, but only if we actually need it

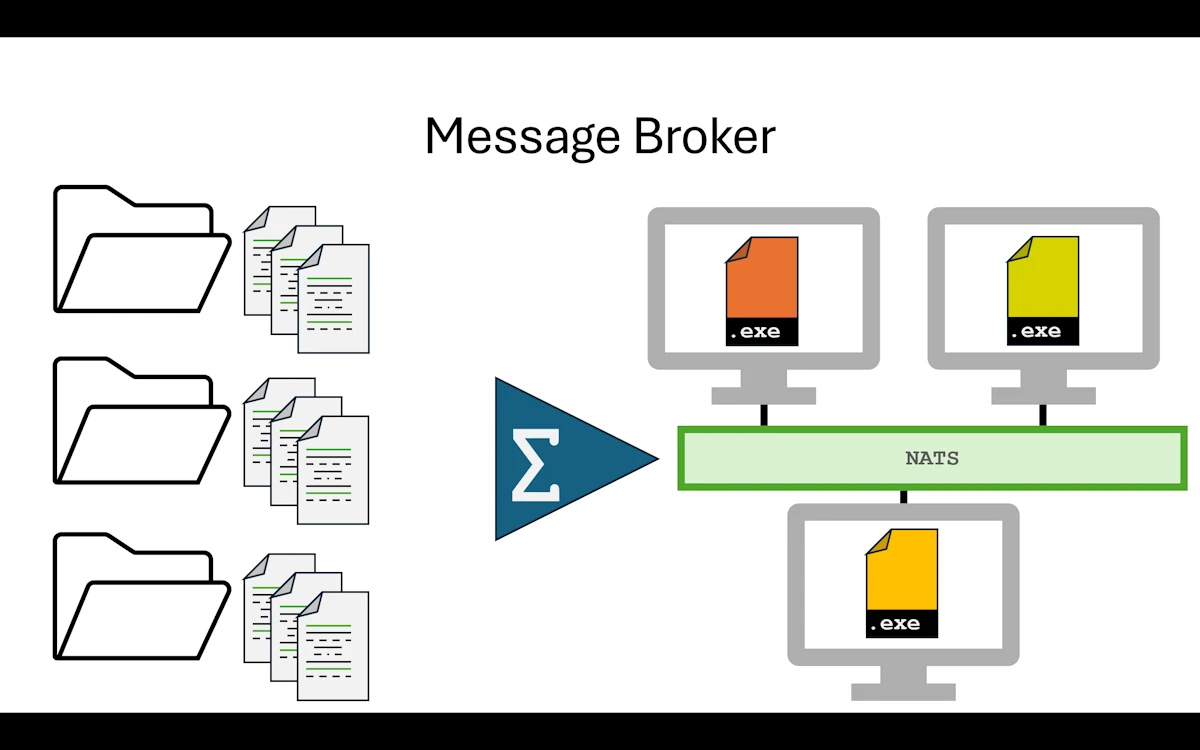

- HTTP gives us network transparency. A message broker gives us topology decoupling. Pick the simplest one that solves the actual problem

- There is no shame in stopping at Step 03. Most production software runs just fine as a well designed modular monolith.

Note The companion project with is available on GitHub.

Table of Contents

- Introduction

- Step 00: Single-file Monolith

- Step 01: Multi-file Monolith

- Step 02: Workspace Monolith

- Step 03: Trait-based Decoupling

- Step 04: Plugin DLL

- Step 05: Runtime DLL Loading

- Step 06: Multi-process via Pipes

- Step 07: Distributed HTTP

- Step 08: Message Broker with NATS

- Conclusion

- Webliography

Introduction

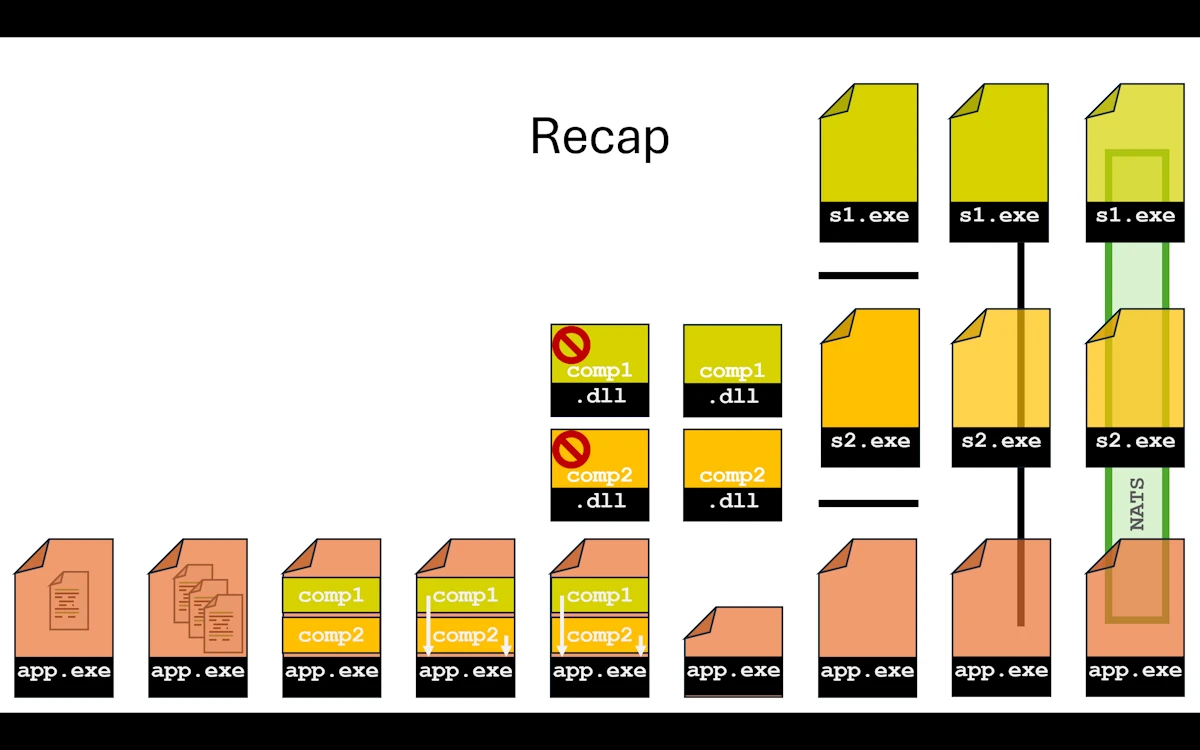

This article is a hands-on walkthrough. We start with a single Rust file and, step by step, we evolve it into a distributed system with independent services talking through a message broker. Nine steps, same business logic every time, zero big rewrites.

The business logic itself is deliberately boring: take an integer, double it, format the result as a string. That is on purpose. We want to focus on project organization and architecture, not on algorithms or clever tricks. Every step produces the exact same output (42 in, “Value-0084” out). What changes is how the code is structured, built, and deployed.

Here is the progression at a glance:

| Step | Architecture | Key Move |

|---|---|---|

| 00 | Single file | Baseline |

| 01 | Multiple files | Module system |

| 02 | Cargo workspace | Independent crates |

| 03 | Traits crate | Dependency inversion |

| 04 | DLL generation | Build artifact experiment |

| 05 | Runtime DLL loading | Plugin system via FFI |

| 06 | Separate processes | JSON over pipes |

| 07 | HTTP services | Network transparency |

| 08 | Message broker (NATS) | Full topology decoupling |

Each step builds on the previous one. We never throw everything away and start over. We refactor incrementally, one concern at a time, and at each stage we pause to understand what we gained, what it cost, and whether we even need to go further. That last point is important: most projects do not need to reach step 08. A well structured modular monolith (Step 02 or 03) is the right answer for the vast majority of use cases. We go all the way to a message broker not because everyone should, but because understanding the full spectrum helps us make better decisions about where to stop.

One recurring theme throughout: no premature refactoring. At each step, we resist the urge to “improve” things that are not broken. We move one piece, verify the output is identical, and only then consider the next move. This discipline is what makes incremental evolution safe.

A few practical notes before we begin:

- Error handling is minimal. We use

unwrap(),expect(), and?liberally. This is a teaching project, not a production codebase. Adding proper error types and recovery strategies would triple the code size and obscure the architecture lessons. We know it is there; we are choosing to keep the signal to noise ratio high. Seestep09_message_broker2for a project with more error handling. - Each step is a self-contained Cargo workspace. We can

cdinto any step folder and runcargo build,cargo test,cargo runindependently. No step depends on another. - The code is on GitHub. Each step folder contains a

QUICK_START.mdwith exact instructions for building and running.

Let’s get started.

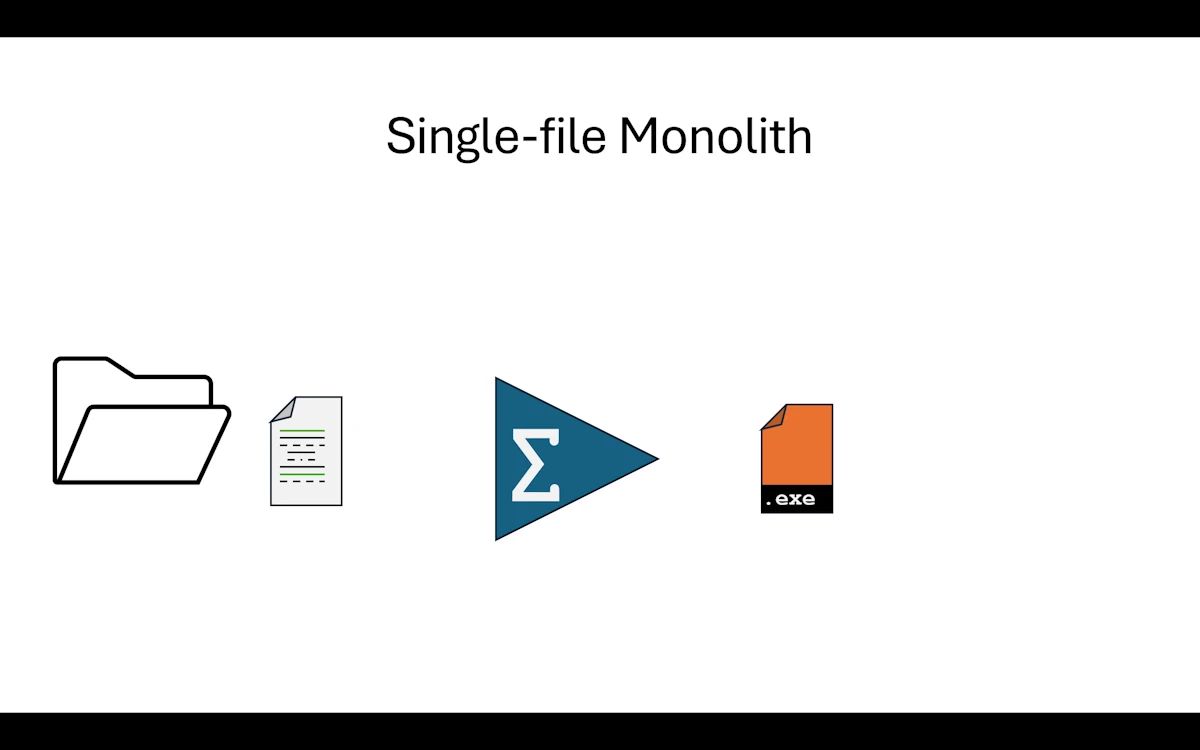

Step 00: Single-file Monolith

Single File Monolith

In the real world, picture all our code living in one single main.rs file. And we are not talking about a cute 50 line script. Think 500 lines, maybe more. At some point we stop enjoying reading our own code and we start worrying about breaking things every time we touch something. We have all been there.

Here we are just taking a snapshot of the situation. No judgment. We work with what we have. In this project the code itself is deliberately simple because we care more about project organization than business logic. But imagine the file is way longer than what we see here.

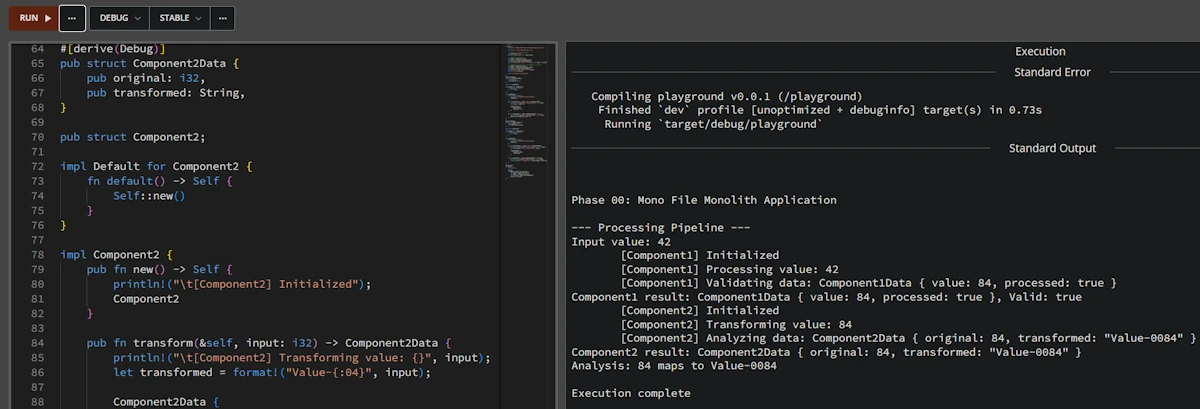

Show Me the Code

Download the project and open step00_monofile_monolith or read the code GitHub withing your browser. You can even copy/paste the code in Rust Playground.

fn main() {

println!("\n\nPhase 00: Mono File Monolith Application\n");

println!("--- Processing Pipeline ---");

let input_value = 42;

println!("Input value: {}", input_value);

let comp1 = Component1::new();

let data1 = comp1.process(input_value);

let is_valid = comp1.validate(&data1);

println!("Component1 result: {:?}, Valid: {}", data1, is_valid);

let comp2 = Component2::new();

let data2 = comp2.transform(data1.value);

let analysis = comp2.analyze(&data2);

println!("Component2 result: {:?}", data2);

println!("{}", analysis);

println!("\nExecution complete");

}

#[derive(Debug)]

pub struct Component1Data {

pub value: i32,

pub processed: bool,

}

pub struct Component1;

impl Default for Component1 {

fn default() -> Self {

Self::new()

}

}

impl Component1 {

pub fn new() -> Self {

println!("\t[Component1] Initialized");

Component1

}

pub fn process(&self, input: i32) -> Component1Data {

println!("\t[Component1] Processing value: {}", input);

let result = input * 2;

Component1Data {

value: result,

processed: true,

}

}

pub fn validate(&self, data: &Component1Data) -> bool {

println!("\t[Component1] Validating data: {:?}", data);

data.processed && data.value > 0

}

}

// Similar code for Component2Data + 1 local test

In main() we do the following:

- We set an

input_value(here 42, because of course it is 42) - We create a

Component1, callprocess()to double the value, thenvalidate()to check the result - We create a

Component2, calltransform()to format the previous result into a string, thenanalyze()to build a summary - We print everything and we are done

Below main() we find the implementation of both components with plenty of println! calls so we can follow what is happening at each step.

At the very bottom of the file, there are a few tests. Yes, they live in the same file too. That is part of the problem.

Expected output

Phase 00: Mono File Monolith Application

--- Processing Pipeline ---

Input value: 42

[Component1] Initialized

[Component1] Processing value: 42

[Component1] Validating data: Component1Data { value: 84, processed: true }

Component1 result: Component1Data { value: 84, processed: true }, Valid: true

[Component2] Initialized

[Component2] Transforming value: 84

[Component2] Analyzing data: Component2Data { original: 84, transformed: "Value-0084" }

Component2 result: Component2Data { original: 84, transformed: "Value-0084" }

Analysis: 84 maps to Value-0084

Execution complete

running 1 test

test test::negative_number_are_invalid ... ok

test result: ok. 1 passed; 0 failed; 0 ignored; 0 measured; 0 filtered out; finished in 0.00s

Why This Step Matters

As hobbyists or beginners, we all know this situation. Either the app does what it needs to do and we can manage the 200 lines of code, or there is a little red light blinking in the back of our brain telling us that adding more stuff will eventually break everything.

Here is what we are dealing with right now:

- Touch one line of code and we have to recompile the whole thing

- Touch one test and we have to run all the tests because they live in the same source file. There is no way to separate them

- The source file is long. Really long. Scrolling around and finding what we need is getting painful

- Maybe a friend or two would like to help, but everyone would be editing the same file and we all know how that goes on GitHub. So we end up working alone

What Rust Gives Us Here

At this point, honestly, Rust gives us one thing: it compiles. The borrow checker is happy, cargo build passes, cargo test is green. That is already something. But the language has a lot more to offer when it comes to organizing code. We just are not using any of it yet.

When to Move On

If the code does the job and we are done with it, let’s not touch a thing. Let’s run cargo install and move on.

But if the application is not finished and we keep wanting to add “just one more little feature”, then it is time to look up from the keyboard and take advantage of everything Rust’s ecosystem and build system can offer.

Summary

We wrote code that works. That is awesome and not everyone can do it. But everything lives in a single file that is getting longer, harder to read, and scarier to modify. The fun factor is going down. And that is a problem, because coding should be fun. Time to split things up into smaller, more manageable pieces.

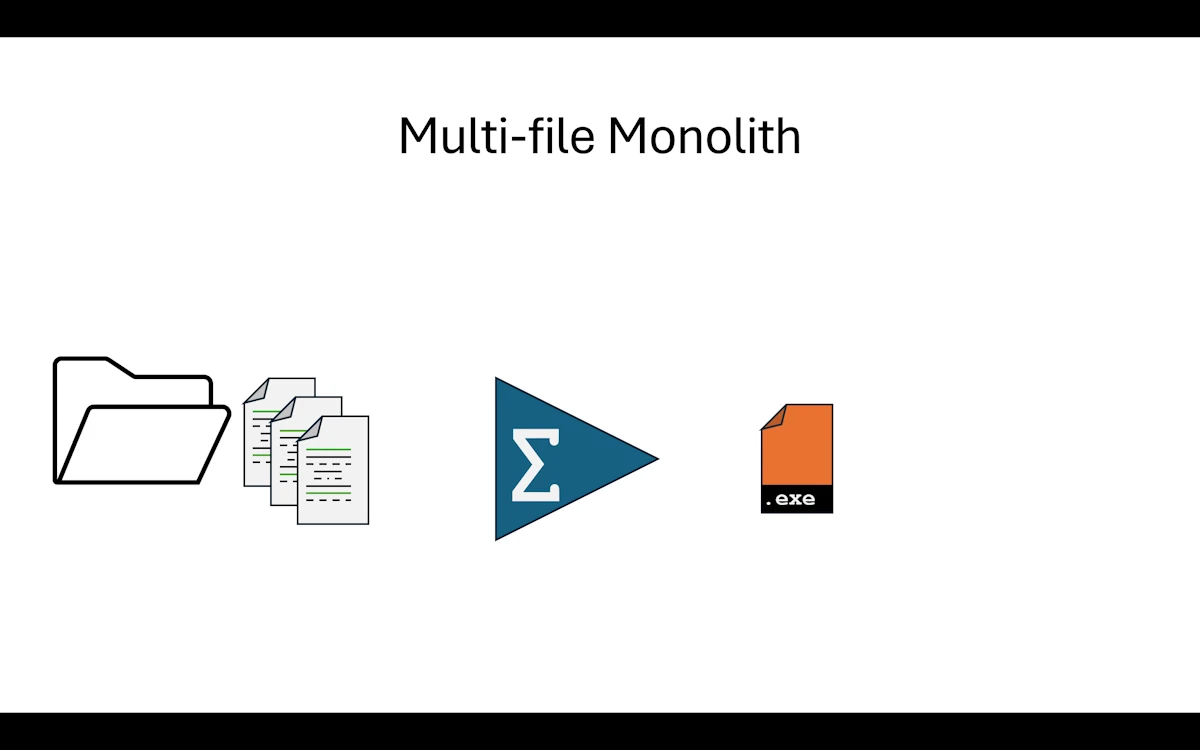

Step 01: Multi-file Monolith

Multi-file Monolith

What Changed from Step 00

The biggest change is that after splitting main.rs, we now have 3 files in the src/ directory instead of 1. And here is something reassuring: the Cargo.toml did not change at all. We reorganized the code and Rust figured out the rest on its own through the mod declarations. Zero config change.

step01_multifiles_monolith/

│ Cargo.toml

└───src

component1.rs

component2.rs

main.rs

Show Me the Code

Download the project and open step01_multifiles_monolith or read the code on GitHub withing your browser.

We keep only the main() function in main.rs. Then, and here it is pretty straightforward, we move everything related to Component1 into component1.rs and do the same with Component2 into component2.rs. In real life it is rarely this clean cut, but we have to start somewhere. Go for it, do not be afraid. In the worst case the Rust build system will bark at us and point us in the right direction.

Next, we go back to main.rs and at the very top we bring the component1 and component2 modules into scope. This part is important, so let’s make sure we have the right mental model here.

// main.rs

mod component1;

mod component2;

use component1::Component1;

use component2::Component2;

fn main() {

// Rest of the code

}

By splitting the code into 3 files we created 3 modules. The Rust build system will find them and build a module tree. Once it has a clear picture of how the modules relate to each other, it compiles everything together. This is not like C++ where each .cpp file is compiled independently into an .o and then linked. In Rust the compilation unit is the crate, not the file. With mod component1; and mod component2; at the top of main.rs, we are telling the build system: “in our crate, main.rs is the root and right below it sit component1 and component2.” There is a whole set of visibility, access, and re-export rules on top of that, but we are not there yet.

Follow the next link to learn much more about the Rust build system and get a better understanding of the module tree.

A word on compilation speed. We often hear that the Rust compiler is slow. That is partly true for a full rebuild from scratch. But

cargodoes incremental compilation at the crate level: if we only changecomponent2.rs, the compiler is smart enough to reuse cached work and not redo everything from zero. Right now with a single crate this does not help us much. But starting from Step 02, when we split into multiple crates in a workspace, incremental compilation really kicks in becausecargoonly rebuilds the crates that actually changed.

Then we shorten method calls using use component1::Component1;. Nothing fancy here, it is just a convenience. Try it: comment out the use component1::Component1; line and run cargo build. It will fail. To fix it we would have to write let comp1 = component1::Component1::new(); instead of let comp1 = Component1::new();. Again, it is purely a shorthand.

As for the contents of component1.rs and component2.rs, it is really just copy paste from the original main.rs.

Important: during this kind of exercise, this is not the time to refactor. Yes, we just copy pasted the code, it is shorter now, and we immediately spot something we want to improve. No, no, no my friend. Not now. Write it down on a piece of paper if you really want to (adding a // TODO: somewhere in the file is debatable). At the end of this step we want to be “iso functional” with step00. Same behavior, same output, zero surprises.

One more thing: let’s not go overboard with the splitting either. We do not want to end up with one function per file. At our level, whether we are hobbyists or early career developers, the big blocks to separate are usually pretty obvious.

Expected output

Do as I say, not as I do: in main() I updated the very first println! line. That is just for bookkeeping. Other than that, the terminal output is identical to Step 00.

Phase 01: Multi Files Monolith Application

--- Processing Pipeline ---

Input value: 42

[Component1] Initialized

[Component1] Processing value: 42

[Component1] Validating data: Component1Data { value: 84, processed: true }

Component1 result: Component1Data { value: 84, processed: true }, Valid: true

[Component2] Initialized

[Component2] Transforming value: 84

[Component2] Analyzing data: Component2Data { original: 84, transformed: "Value-0084" }

Component2 result: Component2Data { original: 84, transformed: "Value-0084" }

Analysis: 84 maps to Value-0084

Execution complete

On the testing side, for fun, I added a test in component2.rs. Again, do as I say, not as I do. I just want to show that things are already better than before: tests now live in the module they belong to.

running 2 tests

test component1::test::negative_number_are_invalid ... ok

test component2::test::test_transform ... ok

test result: ok. 2 passed; 0 failed; 0 ignored; 0 measured; 0 filtered out; finished in 0.00s

Why This Step Matters

From a day to day perspective, here is what changes:

- It is immediately nicer to work with a

main.rsthat has a reasonable size - Reviewing each component in isolation is, oddly enough, way more pleasant

- Adding tests inside each component file is easy and natural

- If our buddy Buck (yes, Buck Rogers himself) wants to help, he can. We tell him to work on

component1.rsand things should go smoothly on GitHub. On the build side, as long as the function signatures stay the same, we are good.

What Rust Gives Us Here

Three things at this step:

- Modules and the module tree. This is the big one. Understanding how the module tree works and having the right mental model is key. Each file under

src/becomes a module, andmoddeclarations inmain.rsdefine the tree structure - The

useshorthand. Withuse component1::Component1;we avoid repeating the full path everywhere. Small thing, but it makes the code much easier to read - Per module tests. Each

#[cfg(test)] mod testblock stays next to the code it tests. We runcargo testand each test clearly shows which module it belongs to in the output

When to Move On

What is a bit annoying is that if we add a test in the component we are working on, we either call it by name (cargo test negative_number_are_invalid), or we get creative with naming and filter with cargo test component1, or we just run everything every time. There is no way to truly build and test one component in isolation because we still have a single crate. That is what the next step will fix.

Summary

We split a monolithic file into 3 files and gained readability, easier code reviews, and per module tests. The Rust module system did the heavy lifting. But we still have one crate, one compilation unit, and no way to build or test a component independently. Time to bring in workspaces.

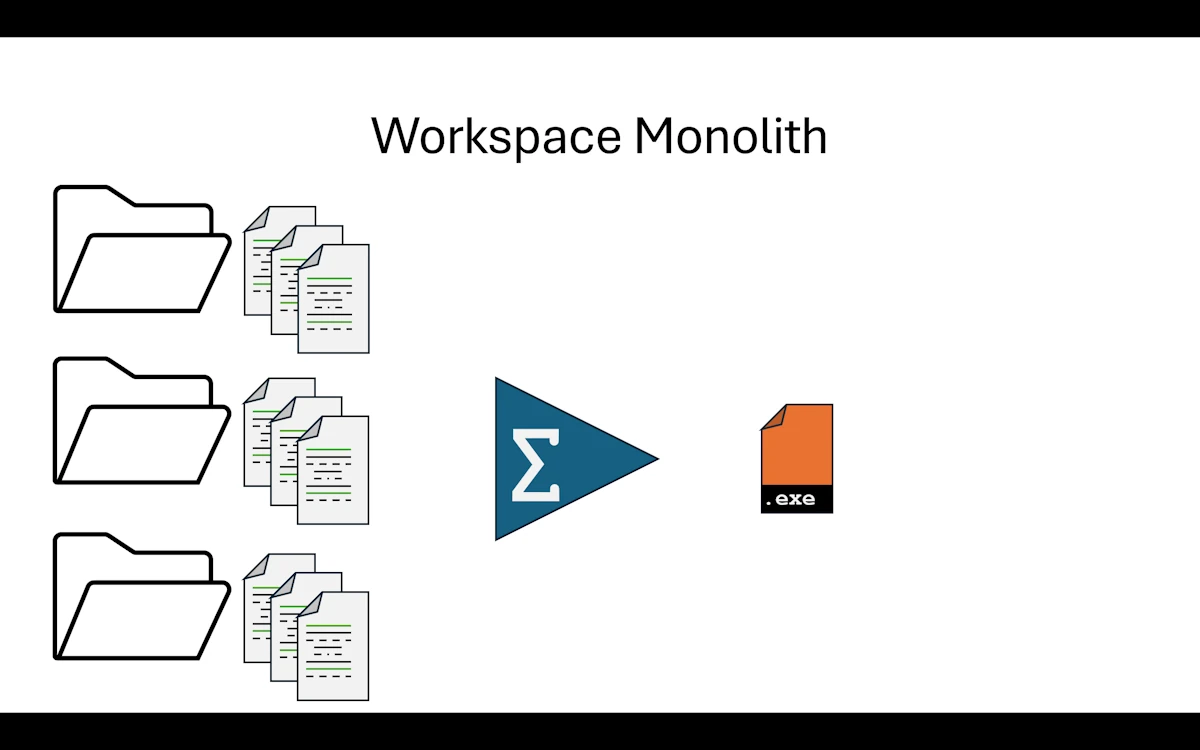

Step 02: Workspace Monolith

Workspace Monolith

What Changed from Step 01

Each component (component1, component2, and app) now lives in its own crate. All three crates are grouped together in a single Cargo workspace. Instead of pulling components from crates.io, we reference them locally through relative paths.

step02_modular_monolith/

│ Cargo.toml

├───app

│ │ Cargo.toml

│ └───src

│ main.rs

├───component1_lib

│ │ Cargo.toml

│ └───src

│ lib.rs

└───component2_lib

│ Cargo.toml

├───src

│ lib.rs

└───tests

component2_test.rs

Show Me the Code

Download the project and open step02_modular_monolith or read the code on GitHub withing your browser.

The Cargo.toml at the project root simply lists the workspace members.

Notice that we list directory names, not package names. It is easy to mix these up. When in doubt, think like the build system: before compiling anything, it needs to know where to find the ingredients. Directories answer “where”, package names answer “what”.

[workspace]

members = ["component1_lib", "component2_lib", "app"]

resolver = "3"

The application crate

The Cargo.toml for app declares a binary target called app (which becomes app.exe on Windows) with its source in src/main.rs.

Then comes the [dependencies] section. This is where it gets interesting. On the left side of the equals sign we write the name we want to use in our code (component1). On the right side, instead of specifying a version to fetch from crates.io like we usually do, we provide a relative path to the local crate ("../component1_lib"). That is the only difference with a “normal” dependency.

[package]

name = "app"

version = "0.1.0"

edition = "2024"

[[bin]]

name = "app"

path = "src/main.rs"

[dependencies]

component1 = { path = "../component1_lib" }

component2 = { path = "../component2_lib" }

In app/src/main.rs, we no longer need mod component1; declarations because the dependencies are already declared in Cargo.toml. This works exactly the same way as when we add serde or tokio to a project.

At the top of main() we show how to print the version numbers of the library crates.

Other than that, the rest of the code is identical to the previous step.

The library crates

These are now fully independent crates. Everything works as if we pulled them from crates.io. For instance, in their respective Cargo.toml files they can have different version numbers than the main application (and in fact component2 is already at 0.2.0 while component1 is at 0.1.0).

Inside each crate’s src/ directory, the source file is now called lib.rs instead of main.rs. The code is exactly the same as before, except for a get_version() function that returns the crate version using env!("CARGO_PKG_VERSION"). And of course, just like in the previous step, if a library crate grows too large we can split it into multiple modules.

Now here is a nice detail about testing.

- In

component1, the tests are inside the source file using#[cfg(test)] mod testswithuse super::*;. This is a unit test: it has access to everything in the module, including private items. - In

component2, the tests live in a separatetests/directory, which forces them to behave like an external client. The test file has to writeuse component2::Component2;because it can only access the public API. Both approaches have their place, and it is good to know the difference early on.

Take the time to break things. Seriously. Rename crates, mess up paths, swap directory names and package names, let the build system complain. Keep going until the relationship between crates, modules, directory names, package names, and the build system clicks in our head. This is one of those things that is much easier to learn by making mistakes than by reading documentation.

Expected output

Phase 02: Modular Monolith Application (main + 2 libs)

Component1 version: 0.1.0

Component2 version: 0.2.0

--- Processing Pipeline ---

Input value: 42

[Component1 Lib] Initialized

[Component1 Lib] Processing value: 42

[Component1 Lib] Validating data: Component1Data { value: 84, processed: true }

Component1 result: Component1Data { value: 84, processed: true }, Valid: true

[Component2 Lib] Initialized

[Component2 Lib] Transforming value: 84

[Component2 Lib] Analyzing data: Component2Data { original: 84, transformed: "Value-0084" }

Component2 result: Component2Data { original: 84, transformed: "Value-0084" }

Analysis: 84 maps to Value-0084

Execution complete

When we run cargo test, notice how each crate’s tests run independently. The build system compiles and tests them separately, and the output tells us exactly which crate each test belongs to.

running 0 tests

test result: ok. 0 passed; 0 failed; 0 ignored; 0 measured; 0 filtered out; finished in 0.00s

Running unittests src\lib.rs (...)

running 1 test

test tests::test_process ... ok

test result: ok. 1 passed; 0 failed; 0 ignored; 0 measured; 0 filtered out; finished in 0.00s

Running unittests src\lib.rs (...)

running 0 tests

test result: ok. 0 passed; 0 failed; 0 ignored; 0 measured; 0 filtered out; finished in 0.00s

Running tests\component2_test.rs (...)

running 1 test

test test_transform ... ok

test result: ok. 1 passed; 0 failed; 0 ignored; 0 measured; 0 filtered out; finished in 0.00s

Doc-tests component1_lib

running 0 tests

test result: ok. 0 passed; 0 failed; 0 ignored; 0 measured; 0 filtered out; finished in 0.00s

Doc-tests component2_lib

running 0 tests

test result: ok. 0 passed; 0 failed; 0 ignored; 0 measured; 0 filtered out; finished in 0.00s

And now we can also do cargo test -p component1 or cargo test -p component2 to run tests for a single crate. That was the whole point.

Why This Step Matters

We just crossed an important threshold. At the end of the build we still get a single executable (a monolith), but it is composed of independent modules (modular). That is why this pattern is called a Modular Monolith.

We could honestly stop right here. A huge number of real world applications are built exactly this way and they work great.

What Rust Gives Us Here

The crate system is the big win. Each crate is its own little world where we reason locally. We are not polluted by the rest of the application. We have our code, our tests, and we live our life in our corner without bothering anyone.

- Independent builds. We can build each crate on its own with

cargo build -p component1. Remember the incremental compilation note from Step 01? This is where it pays off. Change one line incomponent2and only that crate gets rebuilt - Independent tests. We run

cargo test -p component1and only that crate’s tests execute. No more running the entire test suite every time - Independent versioning. Each crate has its own version number in its

Cargo.toml. Whencomponent2ships a breaking change, the team working oncomponent1can keep using the previous version until they are ready to upgrade

The workspace structure also reflects the architecture of the application. By looking at the directory listing, we can immediately tell what the app is made of. There is no framework imposing a folder structure on us. We organize things the way that makes sense for our project.

Things to Think About

- We split code into three crates, but everything still compiles into one binary. What would need to change if we wanted to deploy

service1andservice2independently? - Right now

appdepends on both library crates directly. What happens ifservice1andservice2start depending on each other? How would we prevent that? - We chose to put shared types (

ProcessInput,TransformInput, etc.) in each crate. At what point does it make sense to extract them into a shared crate? - If a teammate only touches

service2, they still rebuild the whole workspace by default. How could we speed up their inner dev loop?

When to Move On

Before moving away from a Modular Monolith, we are going to need some pretty serious arguments because this architecture has proven itself time and again.

There are two main reasons to consider going further: elasticity and scalability.

Elasticity is about handling temporary spikes in workload. Think of Amazon during Black Friday: the system needs to spin up extra capacity fast and scale back down when the rush is over. If one of our components is the bottleneck during those spikes, we might want to extract it from the monolith so we can duplicate it on demand.

Scalability is about a sustained mismatch between what we planned for and what we actually need. We designed the system for 100 users and it turns out we have 100_000. That might require more processing power, better geographic distribution, or a fundamentally different deployment strategy.

But let’s calm down for a second. We do not all have Netflix’s Friday night problems. At our level, as hobbyists or early career developers, a solid architecture (Hexagonal for example) wrapped in a Modular Monolith is more than enough for the vast majority of use cases. We should only move forward when we have measured a real bottleneck, not because it sounds cool.

Summary

We split our project into a Cargo workspace with 3 independent crates. We still produce a single binary, but each component can now be built, tested, and versioned on its own. This is the Modular Monolith pattern. Most of our applications will never need to go beyond this point. When they do, it is because of measured elasticity or scalability needs, not because of hype.

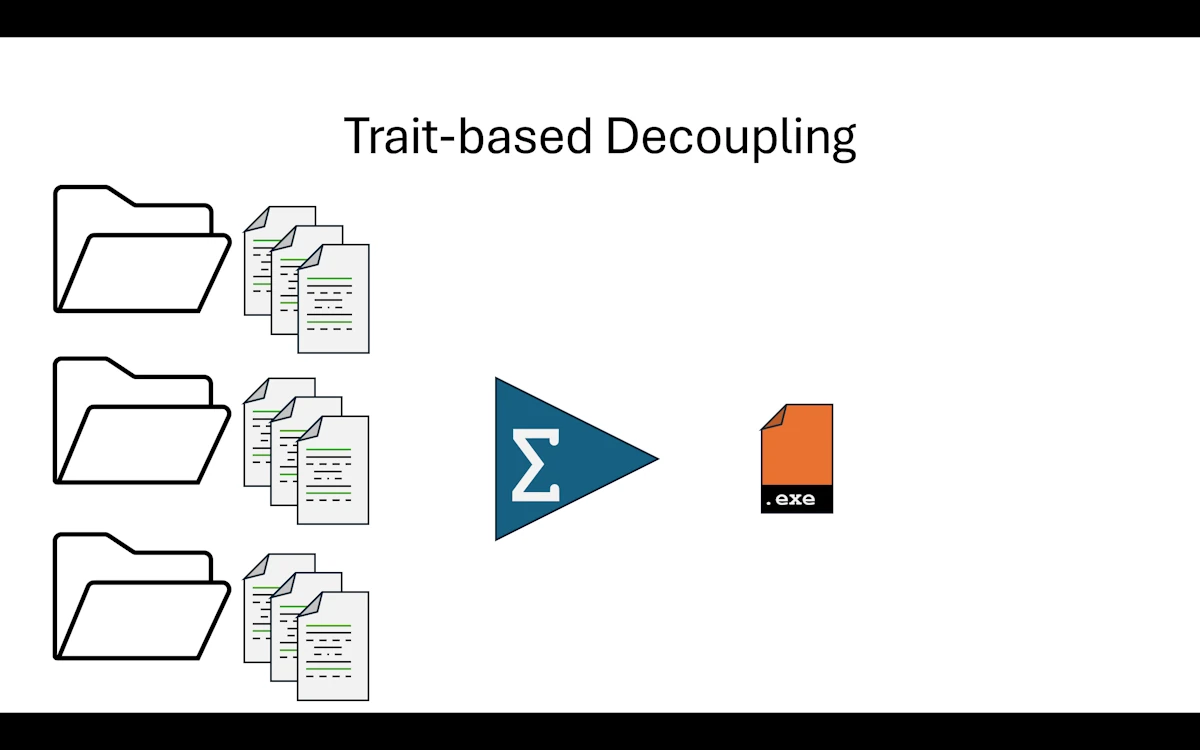

Step 03: Trait-based Decoupling

Trait-based Decoupling

Before reading further…

- If you want to dig deeper into SOLID and the Dependency Inversion Principle (DIP) in Rust, there is a dedicated page here.

- And follow the next link for a primer on Hexagonal Architecture

What Changed from Step 02

This step is more about code architecture than build organization. We create a new crate called traits that defines the methods our application has decided to use for processing and analyzing data. The components then implement those contracts instead of defining their own standalone methods.

Here is a summary of what changed:

| File | Change |

|---|---|

Cargo.toml (workspace) | Added traits to workspace members |

traits/src/lib.rs | NEW — Processor trait, Transformer trait, shared data types (ProcessResult, TransformResult) |

app/Cargo.toml | Added traits in [dependencies] |

app/src/main.rs | Imports traits. Adds run_pipeline(&dyn Processor, &dyn Transformer, i32) to demonstrate polymorphism |

component1_lib/Cargo.toml | Added traits in [dependencies] |

component1_lib/src/lib.rs | Component1Data removed, now uses ProcessResult from traits. Methods moved into impl Processor for Component1 |

component2_lib/Cargo.toml | Added traits in [dependencies] |

component2_lib/src/lib.rs | Component2Data removed, now uses TransformResult from traits. Methods moved into impl Transformer for Component2 |

Show Me the Code

Download the project and open step03_trait_interface or read the code on GitHub withing your browser.

In Step 02, app calls Component1 and Component2 methods directly. The app knows every concrete type and every method signature. If we swap an implementation, we have to change the app code too.

Here we introduce a traits crate that sits between the app and the component libraries. It defines two trait contracts:

Processorwithprocess(i32) -> ProcessResultandvalidate(&ProcessResult) -> boolTransformerwithtransform(i32) -> TransformResultandanalyze(&TransformResult) -> String

The data types (ProcessResult, TransformResult) also move into traits since they are part of the shared contract. The traits crate has zero dependencies. It contains no business logic whatsoever, only trait signatures and type definitions.

Notice that get_version() is a regular function on each component crate, not part of any trait. It is there purely for informational purposes. Not everything has to go through a trait. Only the methods that define the contract between the app and its components belong in a trait. Utility functions like get_version() can stay as plain public functions on the crate.

At the end of main() we call run_pipeline() which runs the exact same processing using trait references (&dyn Processor, &dyn Transformer). The idea is to show that the results are identical whether we call methods directly or through traits.

Try this: Open

app/src/main.rsand changeprocessor.process(input)toprocessor.blahblahblah(input). Build. The compiler tells us:error[E0599]: no method named `blahblahblah` found for reference `&dyn Processor` in the current scopeFair enough. Now go to

traits/src/lib.rsand renameprocesstoblahblahblahin theProcessortrait definition. Build again. This time it complains incomponent1’slib.rs: we are implementing aprocessmethod that is not part ofProcessoranymore, and we are missingblahblahblah.This little experiment shows something important. The application decides which methods it wants to call. If a component does not implement what the trait requires, the build fails. The component cannot impose anything on the application. And that is exactly what makes the

appresilient to changes in the components.

Expected output

Phase 03: Trait-Based Interface (shared contracts)

Component1 version: 0.1.0

Component2 version: 0.2.0

--- Processing Pipeline ---

Input value: 42

[Component1 Lib] Initialized

[Component1 Lib] Processing value: 42

[Component1 Lib] Validating data: ProcessResult { value: 84, processed: true }

Component1 result: ProcessResult { value: 84, processed: true }, Valid: true

[Component2 Lib] Initialized

[Component2 Lib] Transforming value: 84

[Component2 Lib] Analyzing data: TransformResult { original: 84, transformed: "Value-0084" }

Component2 result: TransformResult { original: 84, transformed: "Value-0084" }

Analysis: 84 maps to Value-0084

--- Trait-Based Pipeline ---

[Component1 Lib] Processing value: 42

Pipeline processed: ProcessResult { value: 84, processed: true }

[Component2 Lib] Transforming value: 84

Pipeline transformed: TransformResult { original: 84, transformed: "Value-0084" }

[Component2 Lib] Analyzing data: TransformResult { original: 84, transformed: "Value-0084" }

Pipeline analysis: Analysis: 84 maps to Value-0084

Execution complete

The tests are exactly the same as in Step 02.

Why This Step Matters

- Decoupling.

appcan now call components through&dyn Processorand&dyn Transformer. Therun_pipeline()function depends only on thetraitscrate, not on any concrete component. We could swapComponent1for a completely different implementation without touchingrun_pipeline - Dependency inversion. Components depend on the trait crate (upward), not the other way around. The

appalso depends on the trait crate. No component depends on another component. The arrows point inward, toward the contracts - Prepares for plugins. Steps 04 and 05 will turn components into DLLs. Having a clean trait boundary now makes that transition natural: the trait crate becomes the plugin interface, and each DLL just provides a different

impl

Dependency Graph

Take a moment to read the [dependencies] section of each Cargo.toml. At the end you should come with a picture similar to this one:

traits (defines Processor, Transformer, data types)

/ | \

component1 | component2 (each implements one trait)

\ | /

app (uses both through trait references)

Everyone depends on traits. Nobody depends on each other. That is the whole point.

What Rust Gives Us Here

Traits are the key ingredient here. But beyond the language feature itself, there is a method worth remembering:

- We start in

main.rsand write the function calls the way we want them to look. For example inrun_pipeline()we writeprocessor.process(input)because that reads well and makes sense - Only when we are happy with how things read in

main.rsdo we move on - Then we create the

traitscrate and define the trait signatures that match what we wrote inmain(). For example theProcessortrait with itsprocess()method - From the application’s point of view, we are done. We stated what we need. It is up to the components to fall in line

The crucial thing is that the application sets the tone. The app decides it will call process() on something that implements Processor (see fn run_pipeline(processor: &dyn Processor, ... in main.rs). It is not a library imposing its API on the application. The roles are reversed, and that is exactly why it is called the Dependency Inversion Principle.

Things to Think About

- Our traits have a single method each. What if

Processorneeded two methods (say,processandvalidate)? When does a trait become too fat? - We use

&dyn Trait(dynamic dispatch). What would change if we switched to generics (impl Trait)? What would we gain, and what would we lose? - The

appcrate now depends only on thetraitscrate, not on concrete implementations. Could we write tests forappusing mock implementations? What would that look like? - If we add a third operation (say,

compress) to the pipeline, how many crates do we need to touch? Is that number satisfying?

When to Move On

We need to protect the crown jewels. The core business logic of our application should be immune to changes happening in the components that orbit around it. With traits in place, it is.

My personal take? As soon as the crates from Step 02 are in place, setting up dependency inversion with traits should be the very next move. Do not wait.

And if components use sub-components of their own, the same principle applies: create trait boundaries at their level too. Traits all the way down.

Summary

The business logic is identical to Step 02. Same input (42), same output (“Value-0084”). What changed is the architecture: run_pipeline() uses dynamic dispatch (&dyn Trait) and the traits crate has zero dependencies, it is a pure contract definition. The app now decides the API, and components implement it. We are ready for plugins.

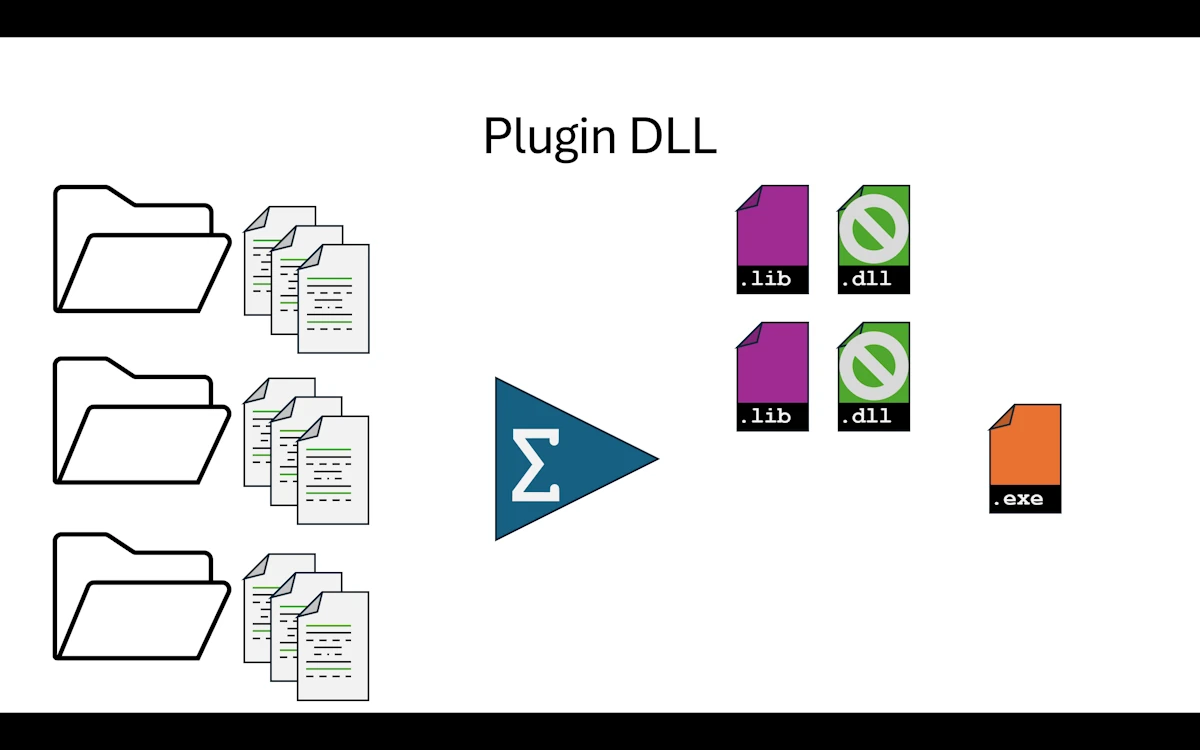

Step 04: Plugin DLL

Plugin DLL

What Changed from Step 03

The component directories are now named component1_dll and component2_dll instead of component1_lib and component2_lib, to reflect that they produce dynamic libraries. The package names in Cargo.toml stay component1 and component2 as before.

step04_plugins_dll/

│ Cargo.toml

├───app

│ │ Cargo.toml

│ └───src

│ main.rs

├───component1_dll

│ │ Cargo.toml

│ └───src

│ lib.rs

├───component2_dll

│ │ Cargo.toml

│ ├───src

│ │ lib.rs

│ └───tests

│ component2_test.rs

└───traits

│ Cargo.toml

└───src

lib.rs

Ideally we just add one section to each component’s Cargo.toml:

[lib]

crate-type = ["cdylib", "rlib"]

And that is it. This tells Cargo to produce two artifacts for each component:

| Artifact | Format | Purpose |

|---|---|---|

component1.rlib | Rust static library | Used by cargo build to link into app.exe |

component1.dll | Windows dynamic library | Available for external consumers (C, C++, Python, other Rust binaries…) |

We also simplified app/src/main.rs to use only run_pipeline(), making our core business logic immune to changes in the components. This is the natural evolution from what we set up in Step 03.

Show Me the Code

Download the project and open step04_plugins_dll or read the code on GitHub withing your browser.

Here is the new version of main(). The code is familiar by now.

use component1::Component1;

use component2::Component2;

use traits::{Processor, Transformer};

fn main() {

println!("\n\nPhase 04: Modular Application with Plugins (1 exe + 2 dll)\n");

println!("Component1 version: {}", component1::get_version());

println!("Component2 version: {}", component2::get_version());

let input_value = 42;

let comp1 = Component1::new();

let comp2 = Component2::new();

run_pipeline(&comp1, &comp2, input_value);

println!("\nExecution complete");

}

fn run_pipeline(processor: &dyn Processor, transformer: &dyn Transformer, input: i32) {

println!("\n--- Processing Pipeline ---");

println!("Input value: {}", input);

let data1 = processor.process(input);

let is_valid = processor.validate(&data1);

println!("Component1 result: {:?}, Valid: {}", data1, is_valid);

let data2 = transformer.transform(data1.value);

let analysis = transformer.analyze(&data2);

println!("Component2 result: {:?}", data2);

println!("{}", analysis);

}

One thing worth noticing: run_pipeline() is now a clear, self-contained function that only depends on traits. It is a natural candidate for moving into app/src/lib.rs. That would let’s create an app/tests/ directory with proper integration tests for the full application pipeline.

For now, I added a simple test that verifies the pipeline does not panic. It is not visible in the snippet above but it is there in the source.

Expected output

Phase 04: Modular Application with Plugins (1 exe + 2 dll)

Component1 version: 0.1.0

Component2 version: 0.2.0

[Component1 Lib] Initialized

[Component2 Lib] Initialized

--- Processing Pipeline ---

Input value: 42

[Component1 Lib] Processing value: 42

[Component1 Lib] Validating data: ProcessResult { value: 84, processed: true }

Component1 result: ProcessResult { value: 84, processed: true }, Valid: true

[Component2 Lib] Transforming value: 84

[Component2 Lib] Analyzing data: TransformResult { original: 84, transformed: "Value-0084" }

Component2 result: TransformResult { original: 84, transformed: "Value-0084" }

Analysis: 84 maps to Value-0084

Execution complete

The tests are similar to what we already know.

Why This Step Matters

This step is mostly interesting intellectually because we do not actually get what we want: one executable loading automatically 2 DLLs at runtime.

What? Really? How can it works? In fact, when we run cargo build, Cargo produces both .rlib and .dll files. But when it links app.exe, it uses the .rlib (static library), not the .dll.

We can verify this ourselves:

cd target/debug

mv component1.dll component1.dll.bak

mv component2.dll component2.dll.bak

.\app.exe # runs perfectly

The .exe is self-contained. The component code is baked into it, just like in Step 03. The DLLs sit in the build folder, unused.

Why does Cargo use rlib instead of the DLL?

Because app/Cargo.toml says:

[dependencies]

component1 = { path = "../component1_dll" }

This is a Rust-to-Rust dependency. Cargo always prefers rlib for these because:

- Rust’s ABI (Application Binary Interface) is unstable and can change between compiler versions

- Static linking is simpler and faster

rlibpreserves full type info, generics, and monomorphization

The cdylib output exists for foreign consumers (C, Python, etc.) that load the DLL via FFI. Rust itself never uses it through a path dependency.

So why does this step exist?

To be clear, I did not expect this behavior. So now I consider this step is a preparation step. It demonstrates that:

- We can tell Cargo to produce DLLs alongside the static library

- The DLLs are real, loadable dynamic libraries (Step 05 will actually use them)

- The

appcode does not change at all. The transition is invisible

Think of it as: our components are now capable of being DLLs, even though nobody is loading them dynamically yet.

What Rust Gives Us Here

The crate-type setting in Cargo.toml lets us produce dynamic libraries that can be shared with other languages: C, C++, Python, and more. One line of config and Cargo handles the rest.

Worth noting that “DLL” here is the Windows terminology. On Linux the equivalent is .so (shared object), on macOS it is .dylib. Cargo produces the right format for whatever platform we are building on.

Things to Think About

- We generate DLL files but never load them. In what scenario would generating both

rlibandcdylibactually matter in a real project? - The DLL and the

rlibcontain the same logic. If we ship the DLL to someone else, can they use it from a different Rust version? From C? From Python? - We did not change a single line of business logic in this step. Is that a strength or a sign that this step is pointless? What is the value of a step that only changes build artifacts?

- If our goal is runtime plugin loading (next step), what contract do we need between the host and the plugin? Where should that contract live?

When to Move On

Honestly, this step is one to understand but not to linger on. Now that we know how it works, let’s move to Step 05 where we actually load DLLs at runtime. That is where things get real.

Summary

We added crate-type = ["cdylib", "rlib"] to our component crates. Cargo now produces both a static library and a DLL for each component, but the application still links statically via rlib. The DLLs are generated but unused. This step is a stepping stone: it proves our components can be packaged as dynamic libraries. Step 05 will close the loop by loading them at runtime with libloading.

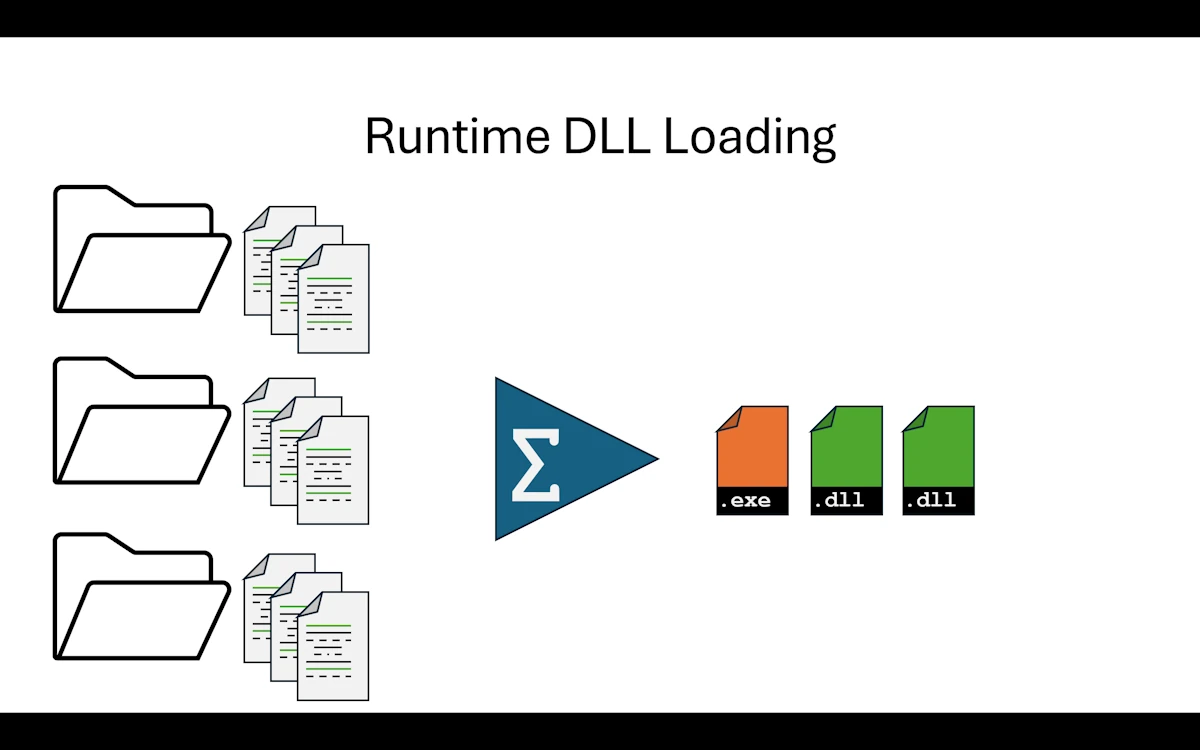

Step 05: Runtime DLL Loading

Runtime DLL Loading

What Changed from Step 04

In Step 04 we told Cargo to produce DLLs alongside the static libraries. At the end, the DLLs were sitting in the build folder, nice and shiny, but nobody was actually loading them. The app was still statically linked through rlib. This time, we close the loop.

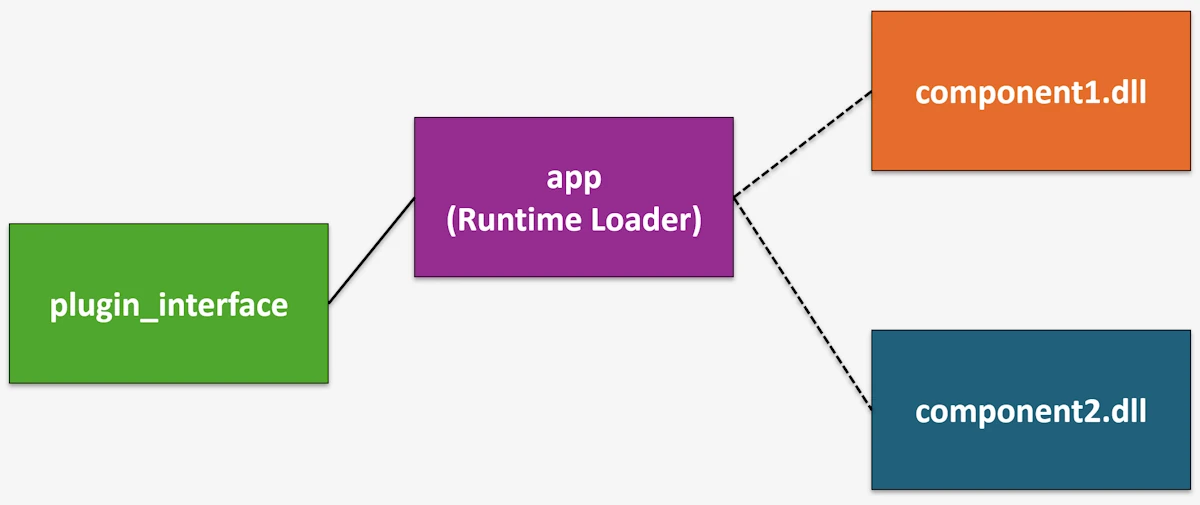

The traits crate is gone. In its place we have a brand new crate called plugin_interface. It still defines the trait contracts (ProcessPlugin, TransformPlugin) and the shared data types (ProcessResult, TransformResult), but it also defines the C-compatible function pointer types and the symbol names that DLLs must export. Think of it as the previous version of the traits crate plus a “DLL protocol” on top.

The component crates now produce only cdylib (no more rlib). The app crate no longer lists component1 or component2 in its [dependencies]. Instead, it depends on plugin_interface and on libloading, a Rust crate that wraps the OS functions for loading shared libraries at runtime (LoadLibrary/GetProcAddress on Windows, dlopen/dlsym on Linux and macOS).

Here is a summary of what changed:

| File | Change |

|---|---|

Cargo.toml (workspace) | traits removed, plugin_interface added. component1_dll and component2_dll remain |

plugin_interface/Cargo.toml | NEW crate, crate-type = ["lib"], zero dependencies |

plugin_interface/src/lib.rs | Traits, data structs, FFI type aliases (ProcessPluginCreate, etc.), symbol name constants |

component1_dll/Cargo.toml | crate-type changed from ["cdylib", "rlib"] to ["cdylib"] only. Depends on plugin_interface |

component1_dll/src/lib.rs | Implements ProcessPlugin. Exports extern "C" factory functions: _plugin_create, _plugin_destroy, _plugin_version |

component2_dll/Cargo.toml | Same as component1: cdylib only, depends on plugin_interface |

component2_dll/src/lib.rs | Implements TransformPlugin. Same three exported symbols |

app/Cargo.toml | No longer depends on component1 or component2. Depends on plugin_interface and libloading |

app/src/main.rs | Loads each DLL at runtime, looks up symbols, creates plugin instances, calls trait methods, destroys instances, unloads DLLs |

Show Me the Code

Download the project and open step05_dyn_plugins_dll or read the code on GitHub within your browser.

The plugin interface crate

This is the contract between the host (app) and the plugins (the DLLs). It defines:

ProcessResultandTransformResult, both marked#[repr(C)]so their memory layout is fixed and predictable across the DLL boundaryProcessPluginandTransformPlugintraits, same as in Step 03/04, but now withSend + Syncbounds- Type aliases for the FFI function pointers:

ProcessPluginCreate,ProcessPluginDestroy,TransformPluginCreate,TransformPluginDestroy, andPluginVersion - Three constants for symbol names:

PLUGIN_CREATE_SYMBOL,PLUGIN_DESTROY_SYMBOL,PLUGIN_VERSION_SYMBOL

Notice that the crate has zero external dependencies. It is a pure contract definition, exactly like traits was in Step 03. The difference is that it now also describes how to cross the DLL boundary.

One detail worth pausing on: the #[expect(improper_ctypes_definitions)] attributes on the function pointer types. The Rust compiler warns us that passing trait object pointers (*mut dyn ProcessPlugin) through extern "C" functions is not standard FFI. And it is right, this is technically unsafe territory. We silence the warning with #[expect(...)] because we control both sides of the boundary and we know the trait object layout will be consistent (same compiler, same build). This would absolutely not work if the DLL was compiled with a different Rust version. More on that later.

The component DLLs

Each component DLL exports exactly three symbols:

_plugin_create()allocates a new instance of the component on the heap usingBox::into_raw(Box::new(...))and returns it as a raw pointer to the trait object_plugin_destroy(ptr)takes that pointer back, wraps it in aBox::from_raw(), and drops it. This is how we free the memory_plugin_version()returns a pointer to a null-terminated C string containing the crate version, built at compile time withconcat!(env!("CARGO_PKG_VERSION"), "\0")

All three functions are extern "C" and marked #[unsafe(no_mangle)] so that libloading can find them by name in the DLL’s export table.

The Component1 and Component2 structs themselves are private to their respective crates. The only thing that leaks out is the trait implementation, through the factory functions. From the outside, we never see Component1 or Component2. We only see a *mut dyn ProcessPlugin or *mut dyn TransformPlugin.

The business logic is identical to all previous steps. process() doubles the value, transform() formats it as "Value-0084", and so on. No surprises there.

The host application

app/Cargo.toml now looks like this:

[dependencies]

plugin_interface = { path = "../plugin_interface" }

libloading = "0.9"

Notice what is missing: component1 and component2. The app does not know about them at compile time. It only knows the plugin interface. The components are discovered and loaded at runtime.

In main.rs, the loading sequence for each plugin follows the same pattern:

- Build the DLL path relative to the running executable using

plugin_path(). This helper figures out the right extension (.dll,.so, or.dylib) depending on the target OS - Load the DLL with

Library::new(&path). This is the equivalent ofLoadLibraryon Windows - Look up the

_plugin_versionsymbol, call it, convert the returned*const c_charto a Rust string, and print it - Look up the

_plugin_createsymbol, call it to get a trait object pointer, and dereference it to get a&dyn ProcessPlugin(or&dyn TransformPlugin) - Call trait methods just like in Step 03. The syntax is exactly the same:

plugin.process(input_value),plugin.validate(&result) - Look up the

_plugin_destroysymbol and call it to free the plugin instance - Let

libgo out of scope, which unloads the DLL

The entire component1 block is wrapped in a { ... } scope so that lib (the loaded DLL) is dropped (and thus unloaded) before we move on to component2. We get the process_result out of that scope and pass it to component2, exactly as before.

Everything is wrapped in unsafe blocks with detailed // SAFETY comments. There are a lot of them. That is not sloppy code. That is how FFI works in Rust: every call across the DLL boundary is inherently unsafe, and we are expected to document why we believe each call is sound.

Dashed lines indicate runtime discovery using LoadLibrary/libloading

Note About the Address Space

Since DLLs are loaded into the host process, they share the same virtual address space as app.exe. A pointer obtained inside the DLL is valid inside the app and vice versa. This is fundamentally different from what we will see in Step 06, where each service runs in its own process with its own isolated address space.

+---------------------------------------------------------+

| Process Virtual Address Space |

| |

| 0x00007FF6_00000000 app.exe code + data |

| 0x00007FFB_10000000 component1.dll |

| 0x00007FFB_20000000 component2.dll |

| 0x00007FFB_80000000 kernel32.dll, ntdll.dll, ... |

| 0x000000A0_00000000 heap |

| 0x000000FF_FFFFFFFF stack (grows down) |

| |

+---------------------------------------------------------+

This means we can pass raw pointers between the app and the DLLs and they just work. The only catch is memory allocation: if the DLL allocates memory, the DLL should free it (and vice versa). Different allocator instances do not play well together even within the same address space. That is exactly why we have _plugin_create and _plugin_destroy as a matched pair.

| Boundary | Same address space? | Can share pointers? | Communication |

|---|---|---|---|

| Function call (Step 02) | Yes | Yes | Direct |

| DLL in same process (Step 04/05) | Yes | Yes* | Function call via FFI |

| Separate process (Step 06) | No | No | Pipes, HTTP, NATS… |

* Careful with allocators: if the DLL and the exe use different allocator instances, free memory on the same side that allocated it.

Expected output

Phase 05: Modular Application with Dynamic Plugins (runtime DLL load/unload)

--- Processing Pipeline ---

Input value: 42

Loading component1 DLL from: <path_to_exe_dir>\component1.dll

Component1 DLL loaded

Component1 version: 0.1.0

[Component1 DLL] Initialized

[Component1 DLL] Processing value: 42

[Component1 DLL] Validating data: ProcessResult { value: 84, processed: true }

Process result: ProcessResult { value: 84, processed: true }, Valid: true

[Component1 DLL] Destroying plugin instance

Unloading Component1 DLL...

Loading component2 DLL from: <path_to_exe_dir>\component2.dll

Component2 DLL loaded

Component2 version: 0.2.0

[Component2 DLL] Initialized

[Component2 DLL] Transforming value: 84

[Component2 DLL] Analyzing data: TransformResult { original: 84, transformed: "Value-0084" }

Transform result: TransformResult { original: 84, transformed: "Value-0084" }

Analysis: 84 maps to Value-0084

[Component2 DLL] Destroying plugin instance

Unloading component2 DLL...

Execution complete

The DLL path will of course be different on your machine. The rest is identical to every previous step: 42 goes in, gets doubled to 84, gets formatted as “Value-0084”. Business logic has not changed one bit.

On the testing side, each component DLL crate has its own unit tests. We can run cargo test -p component1 or cargo test -p component2 as usual. The tests exercise the trait implementations directly, no DLL loading involved. This is one of the nice properties of the design: we test the logic in isolation and only involve FFI when we run the full app.

running 2 tests

test tests::test_process ... ok

test tests::test_validate ... ok

test result: ok. 2 passed; 0 failed; 0 ignored; 0 measured; 0 filtered out; finished in 0.00s

running 2 tests

test tests::test_analyze ... ok

test tests::test_transform ... ok

test result: ok. 2 passed; 0 failed; 0 ignored; 0 measured; 0 filtered out; finished in 0.00s

Why This Step Matters

This is the step where we go from “our components could be DLLs” to “our components are DLLs and the app loads them at runtime.”

- True decoupling at the binary level. The

appcrate does not depend oncomponent1orcomponent2at compile time. We can rebuild a component DLL, drop it next toapp.exe, and run again without recompiling the app. That is the plugin promise - Hot-swappable components (in theory). Because we explicitly load and unload DLLs, nothing prevents us from loading a different DLL that exports the same symbols. As long as the new DLL implements the same plugin interface, the

appwill not care. We could even load plugins from a configuration file or a plugin directory - The cost is

unsafe. We are leaving the safe harbor of the Rust type system. Every symbol lookup, every pointer dereference across the DLL boundary isunsafe. If we get the types wrong, if we forget to call_plugin_destroy, if we use a plugin pointer after the DLL is unloaded, we get undefined behavior with zero compiler help. This is the fundamental tradeoff

Worth knowing: the approach we use here (passing Rust trait object pointers across the DLL boundary) is not standard FFI practice. It works because we compile both sides with the same Rust compiler. If we needed true cross-language compatibility, we would define a C-compatible vtable manually or use a proper C API with opaque handles. What we have here is more of a “Rust-to-Rust plugin system” than a general purpose FFI layer.

What Rust Gives Us Here

Rust does not make DLL loading safe. It makes it explicit. Every unsafe block screams “something risky is happening here, and I have thought about why it is OK.” That might seem like a burden, but compare it to C/C++ where LoadLibrary + GetProcAddress is just normal code with no special warnings. In Rust, the compiler forces us to acknowledge each danger point.

Beyond that, a few things are really helpful:

libloadingcrate. Wraps the OS-specific dynamic loading APIs (LoadLibrary/dlopen) into a single cross-platform interface. We write one codebase and it runs on Windows, Linux, and macOS. Theplugin_path()helper inmain.rshandles the file extension differencesBox::into_raw/Box::from_raw. This is how we transfer ownership of heap-allocated objects across the FFI boundary.Box::into_rawgives up Rust’s ownership tracking and hands us a raw pointer.Box::from_rawtakes it back. The matched_plugin_create/_plugin_destroypattern ensures no memory leaks#[unsafe(no_mangle)]andextern "C". These two attributes are what make a Rust function visible and callable from outside.no_manglepreserves the symbol name (Rust normally mangles names), andextern "C"uses the C calling convention solibloadingcan find and call the functionrepr(C)on data structs. Ensures the struct layout matches what a C compiler would produce. Without this, Rust is free to reorder fields or add padding however it likes, which would break things across the DLL boundary

Things to Think About

- Every FFI call in this step requires

unsafe. If a DLL has a bug that corrupts memory, what happens to the host process? How does this compare to a bug in a statically linked library? - We must compile the DLL and the host with the exact same Rust toolchain version. What would happen if we upgraded

rustcfor the host but forgot to rebuild the DLLs? - Could we design a plugin system where third parties ship DLLs for our host? What extra guarantees would we need beyond what we have here?

- If the host process crashes because of a faulty plugin, all other plugins go down too. How could we get crash isolation without giving up dynamic loading entirely?

When to Move On

Dynamic loading is a powerful tool but it comes with real constraints. The biggest one in our case: we must compile the DLLs and the host app with the same Rust compiler version. Rust does not have a stable ABI, which means the trait object layout (vtable pointer + data pointer) can change between compiler releases. If we compile the app with Rust 1.82 and a DLL with Rust 1.83, we might get silent corruption.

For a plugin system where we control all the pieces and ship everything together, this is fine. For a plugin system where third parties ship DLLs compiled independently, this is a real problem. In that case, we would need a C-based plugin API with a stable ABI.

The other thing to consider is that we are still running everything in a single process. If a DLL crashes (segfault, stack overflow, panic that crosses the FFI boundary), it takes down the whole application. There is no isolation.

If either of these concerns is an issue for you (as it is for me), the next logical step is to move components into their own processes. That is Step 06, where components become standalone executables communicating through pipes. Different address spaces, full crash isolation, and no ABI compatibility worries because data crosses the boundary as serialized JSON, not raw pointers.

Summary

We replaced static linking with runtime DLL loading using libloading. The plugin_interface crate defines the contract: traits, data types, FFI function signatures, and symbol names. Each component DLL exports three extern "C" functions (_plugin_create, _plugin_destroy, _plugin_version) and the host app loads them by name at runtime. The business logic is unchanged (42 in, “Value-0084” out). We gained true binary-level decoupling and the ability to swap plugins without recompiling the host. The price is unsafe code at every DLL boundary crossing and the requirement that everything be compiled with the same Rust toolchain.

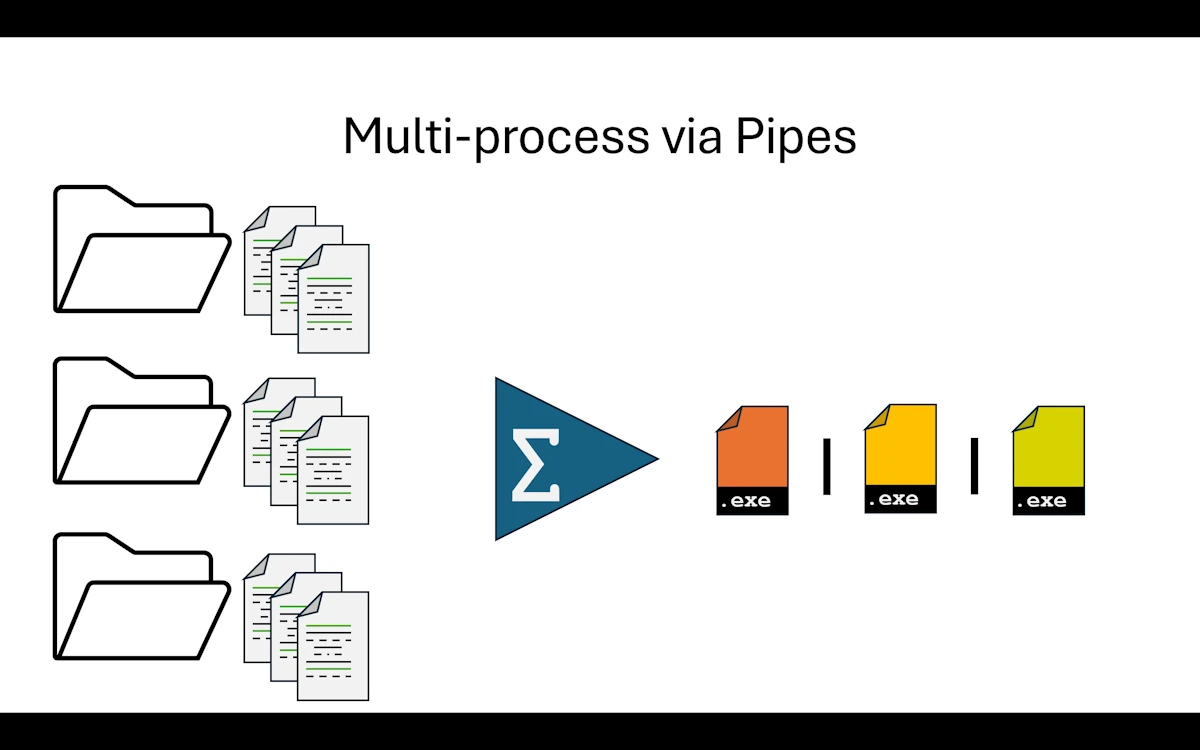

Step 06: Multi-process via Pipes

Multi-process via Pipes

What Changed from Step 03

Wait, Step 03? Yes. We are comparing against Step 03, not Step 05. Here is why. Step 04 was an experiment where we asked Cargo to produce DLLs but ended up still linking statically through rlib. Step 05 went all the way and loaded DLLs at runtime using libloading. Both steps were valuable learning experiences, but the DLL path is a niche pattern in the Rust ecosystem. So we rewind to Step 03 (the trait-based modular monolith) and take a completely different fork in the road.

In Step 05, all components lived in the same address space. If a DLL crashed, the whole application went down with it. This time, we split the application into separate processes, each with its own isolated address space. If a service crashes, the orchestrator can detect it and react. No more shared memory, no more raw pointers, no more unsafe.

The vocabulary changes too. We no longer talk about “components.” From now on, they are services. We still run everything on the same machine, but each service is its own executable with its own main().

To keep things simple, the main application (we kept the name app in the code, though it really acts as an orchestrator) spawns both services as child processes. It does not expect them to be already running somewhere. It starts them, talks to them, and shuts them down.

The big mental shift is this: we can no longer call functions directly. There are no library crates to link against, no DLLs to load. Instead, we set up a message protocol. The orchestrator sends JSON messages through stdin pipes, and the services reply through stdout pipes. Data travels as serialized text, not as in-memory structs.

We kept the get_version() pattern from the DLL experiments because it is genuinely useful: the orchestrator can ask each service for its version right after startup, which is handy for logging and debugging.

One more thing about the app crate: we display [App] in the terminal, but mentally we should think “orchestrator.” We could rename it, but for consistency across the series we kept the same crate name.

Now, about the traits we introduced in Step 03. They were a big deal back then because they defined the contract between app and its components. Here, traits become less central. The contract is now defined by the message types in common/src/lib.rs. The Message enum lists every possible exchange between the orchestrator and the services: Process, ProcessResult, Transform, TransformResult, GetVersion, VersionResult, Shutdown. If we think about it, the message definitions play the exact same role as the trait definitions did in Step 03. They describe what each service can do and what data it expects. The enforcement mechanism is different (runtime JSON parsing instead of compile-time trait bounds), but the intent is identical.

Show Me the Code

Download the project and open step06_multi_process or read the code on GitHub within your browser.

The common crate (message protocol)

The common crate is the shared vocabulary between the orchestrator and the services. Its Cargo.toml depends on serde and serde_json, nothing else. Inside lib.rs, we find the request/response structs (ProcessRequest, ProcessResponse, TransformRequest, TransformResponse, VersionResponse) and the Message enum that wraps them all.

All the structs derive Serialize and Deserialize so they can travel as JSON over the pipes. The Message enum also provides two helper methods: to_json() for serialization (using unwrap() because we control the types and serialization cannot fail) and from_json() for deserialization (returning a Result because incoming JSON might be malformed).

This crate is the equivalent of the traits crate from Step 03. It defines the contract. Any service that wants to participate in the pipeline must understand these message types.

The app (orchestrator)

The app/Cargo.toml depends on common (the message definitions, similar to how it depended on traits in Step 03) plus serde and serde_json. No dependency on service1 or service2. The orchestrator does not know anything about the services’ internals. It only knows the message protocol.

The core abstraction is ServiceHandle, a struct that holds a Child process and the service name. Let’s walk through it.

ServiceHandle::new() spawns the service executable using Command::new(). Three pipe configurations matter here:

stdin(Stdio::piped())creates a writable pipe so the orchestrator can send messages to the servicestdout(Stdio::piped())creates a readable pipe so the orchestrator can receive responsesstderr(Stdio::inherit())lets the service’s diagnostic output appear directly in the terminal

That last one is important. Since stdout is reserved for JSON protocol messages, services use eprintln!() for all their diagnostic output (startup banners, progress logs, error messages). Because stderr is inherited, those messages show up in the same terminal as the orchestrator’s own output. This is why we see the indented [Service1] and [Service2] lines interleaved with the [App] lines in the expected output.

send_message() serializes a Message to JSON, writes it as a single line to the child’s stdin, and flushes. The flush is essential: without it, the message might sit in a buffer and the service would never see it.

read_response() wraps the child’s stdout in a BufReader, reads one line (blocking until data arrives), and returns the raw JSON string. The caller is responsible for trimming and parsing.

shutdown() sends a Message::Shutdown to the service and then calls wait() to reap the child process. Notice that shutdown() takes self by value, consuming the ServiceHandle. Once we shut down a service, the handle is gone. No accidental reuse.

In main(), the orchestrator locates the service executables, spawns them, queries their versions, runs the processing pipeline (send 42 to service1, get 84 back, send 84 to service2, get “Value-0084” back), and shuts everything down.

The error handling is intentionally lightweight. Most I/O operations use ? to propagate errors, and JSON parsing uses expect() to crash immediately on protocol violations. In a production system we would want retry logic, timeouts, and proper error variants. But that would triple the code size and obscure the architecture lesson, so we keep it simple.

One thing that is more verbose compared to calling functions in a library: there is real plumbing code here. We serialize, send, receive, deserialize, pattern-match on the response variant. What used to be component1.process(value) is now a whole send/receive/parse dance. That is the cost of process isolation.

The services

Let’s look at service1. Service2 follows the exact same pattern, just with TransformationService instead of ProcessingService.

The Cargo.toml depends on common (same message types) plus serde and serde_json.

In main.rs, the interesting part is that the business logic is tiny: ProcessingService is a zero-sized struct whose process() method doubles the input value and returns a ProcessResponse. That is it. Same logic as every previous step.

Everything else is plumbing. The main() function sets up a loop over stdin.lock().lines(). For each line, it tries to parse the JSON into a Message and dispatches with a match:

Message::Process(request)calls the business logic, wraps the result inMessage::ProcessResult, serializes it, and writes it to stdoutMessage::GetVersionbuilds aVersionResultusingenv!("CARGO_PKG_VERSION")(the version fromCargo.toml, baked in at compile time) and sends it backMessage::Shutdownbreaks out of the loop, ending the process- Any other variant logs a warning to stderr

- Parse errors are logged to stderr but the loop continues (resilient to garbage input)

Notice that services never use println!(). All diagnostic output goes through eprintln!() (to stderr), and all protocol responses go through writeln!(stdout, ...) followed by flush(). Mixing those up would corrupt the JSON protocol.

Both the orchestrator and the services run in a single-threaded, linear, synchronous context. No async, no threading. Simple and easy to reason about.

Right now, service1 and service2 are monolithic single-file executables. Of course, we could apply everything we learned in Step 02 and Step 03 to give each service an internal modular architecture with multiple files, separate crates, unit tests, and integration tests. But that would be premature for what we are trying to demonstrate here.

Expected output

Phase 06: Multi process

[App] Starting service: Service1

[App] Starting service: Service2

[Service1] Processing Service

[Service1] Listening on STDIN for JSON messages...

[Service1] Initialized - Ready to process requests

[App] service1 version: 0.1.0

[Service2] Transformation Service

[Service2] Listening on STDIN for JSON messages...

[Service2] Initialized - Ready to transform requests

[App] service2 version: 0.2.0

--- Processing Distributed Pipeline ---

[App] Input value: 42

[App] Sending to Service1...

[Service1] Input value: 42

[App] Service1 result: value=84, processed=true

[App] Sending to Service2...

[Service2] Input value: 84

[App] Service2 result: original=84, transformed=Value-0084

[App] Pipeline completed successfully!

[App] Final result: Value-0084

[App] Cleaning up services...

[App] Shutting down service: Service1

[Service1] Shutdown signal received

[Service1] Shutting down

[App] Shutting down service: Service2

[Service2] Shutdown signal received

[Service2] Shutting down

[App] All services shut down

[App] Execution complete

Tests are similar to what we have seen before. Each service has its own unit tests that exercise the business logic directly, without any pipe or message machinery involved.

cargo test -p service2

Finished `test` profile [unoptimized + debuginfo] target(s) in 0.05s

Running unittests src\main.rs

running 2 tests

test test::transform_formats_value ... ok

test test::transform_pads_small_value ... ok

test result: ok. 2 passed; 0 failed; 0 ignored; 0 measured; 0 filtered out; finished in 0.00s

Why This Step Matters

We just crossed a major threshold. We went from calling functions (whether in libraries, through trait objects, or across DLL boundaries) to exchanging messages between independent processes. This is a fundamentally different model.

The orchestrator and the services are fully decoupled. It does not matter if service1 needs a nuclear reactor to do its computation. From the orchestrator’s perspective, it sends a request and gets a response. The service’s internals are completely opaque.

There are a few things we intentionally left simple in this step.

Error handling is minimal. In a real system, we would want to handle cases like a service crashing mid-pipeline, malformed responses, timeouts when a service hangs, and so on. We could define error message variants, implement retry strategies, or use supervisor patterns. But all of that would make the code significantly more complex, and the point of this step is to show the architecture, not to build a production-grade framework.

Elasticity is within reach. If the orchestrator has a lot of work to do, it can spawn additional instances of service1 or service2. Each new instance is just another child process with its own pipes. We get basic elasticity on a single machine. True scalability (spreading services across multiple machines) is not possible yet because we rely on local pipes, but we are getting closer. That is exactly what Step 07 and Step 08 will address.

Try this (mentally): Imagine the orchestrator spawns ten instances of service1 instead of one. Each gets its own

ServiceHandlewith its own pair of pipes. At the OS level, this is trivial. The real bottleneck is in the orchestrator itself:read_response()blocks until one service replies. To send work to all ten in parallel and collect responses as they come, we would need either threads (one per handle) or async I/O (tokiowithtokio::process::Command). The services themselves would not change at all. This is a nice property of process isolation: scaling out is an orchestrator concern, not a service concern.

What Rust Gives Us Here

Rust does not do anything magical for multi-process communication. Pipes and JSON are OS and library features, not language features. But a few things in the Rust ecosystem make this step smoother than it would be in many other languages.

serdeandserde_json. The derive macros (#[derive(Serialize, Deserialize)]) turn our structs and enums into serializable types with zero boilerplate. TheMessageenum serializes to JSON using Serde’s default tagged representation:{"Process":{"value":42}}. Deserialization gives us back a proper Rust enum we canmatchon. No manual JSON parsing, no stringly-typed dispatchstd::process::Command. The standard library gives us a clean API for spawning child processes with piped stdin/stdout/stderr. No external crate needed. TheChildstruct owns the process handle and the pipe endpoints, and dropping it cleans up resources- Pattern matching on enums. The

matchblock in each service’s main loop is a perfect fit for message dispatch. The compiler ensures we handle every variant (or explicitly ignore them with a wildcard). If we add a new message type to theMessageenum, every service that does not handle it will get a compiler warning - Ownership for cleanup.

ServiceHandle::shutdown()takesselfby value. Once we call it, the handle is consumed and we cannot accidentally send messages to a dead service. The type system prevents use-after-shutdown bugs at compile time

Things to Think About

- Our orchestrator spawns one instance of each service. What if we need ten instances of

service1to handle load? What part of the orchestrator would need to change, and what part of the services would stay the same? - Right now, if

service2crashes mid-pipeline, the orchestrator gets a broken pipe error and stops. How would we design a supervision strategy? Should the orchestrator restart the service, retry the message, or bail out? - We use JSON as our message format. What would change if we switched to a binary format like MessagePack or Protocol Buffers? What would we gain, what would we lose?

- Our pipes only work on the same machine. If we need

service1on machine A andservice2on machine B, what is the minimum we need to change? (Hint: think about what the next two steps will introduce.) - How would we add monitoring? If someone asks “is service1 healthy right now?”, where does that question go and who answers it?

When to Move On

Pipes are great for parent/child communication on the same machine. But they have a fundamental limitation: both processes must run on the same host. The orchestrator spawns the services as children, and the pipes are local OS constructs.

If we are going to rewrite the communication layer anyway, we might as well make the services capable of running anywhere, locally or on a remote machine. That is exactly what Step 07 does with HTTP, and what Step 08 pushes further with a message broker (NATS). The business logic stays the same. Only the transport changes.

Summary

We split the application into three independent executables: an orchestrator (app) and two services (service1, service2). They communicate through JSON messages sent over stdin/stdout pipes. The common crate defines the message protocol (a Message enum with variants for each request/response type), replacing the trait definitions from Step 03. The business logic is unchanged (42 in, “Value-0084” out). We gained full process isolation, crash containment, and the foundation for multi-instance elasticity. The tradeoff is more plumbing code and the overhead of serialization compared to direct function calls.

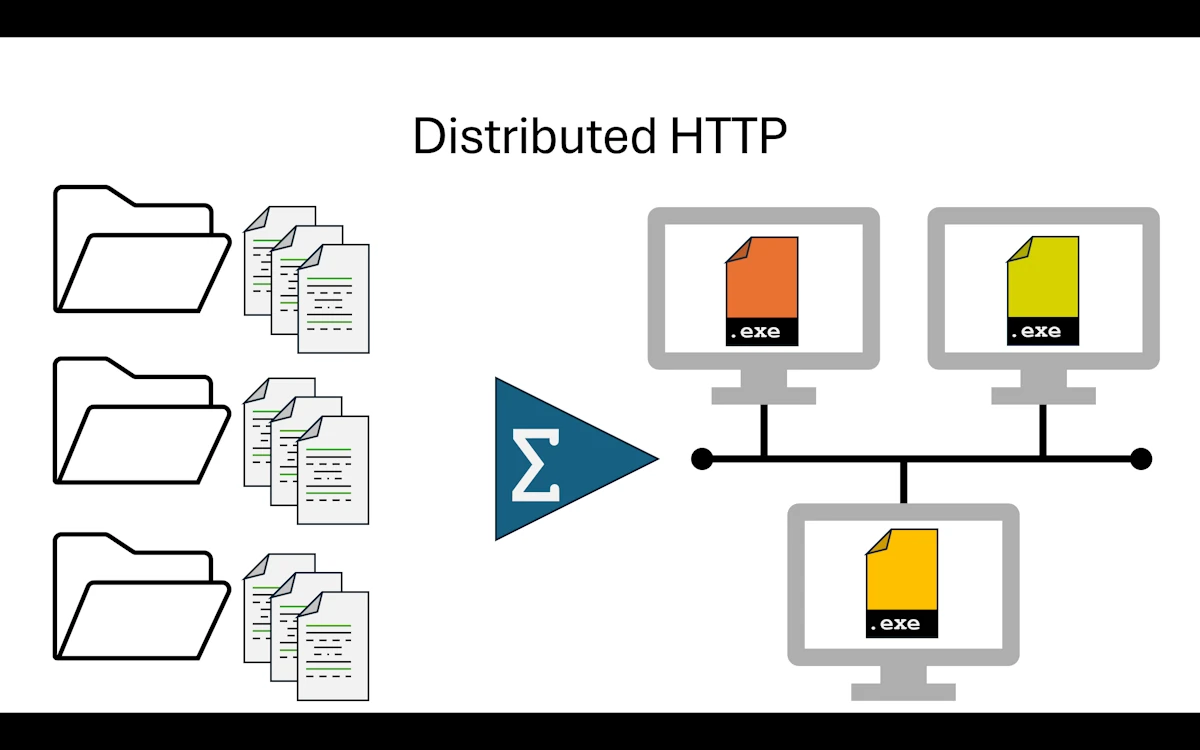

Step 07: Distributed HTTP

Distributed HTTP

What Changed from Step 06

This is another big shift. We still exchange messages between services, but they now travel over HTTP instead of stdin/stdout pipes. That single change unlocks something we could not do before: the services no longer need to run on the same machine as the orchestrator. They do not even need to be started by the orchestrator. Each service is an independent HTTP server that we launch in its own terminal, and the app crate simply sends HTTP requests to them.

The directory layout is identical to Step 06. Same four crates, same names.

step07_distributed_http/

│ Cargo.toml

├───app

│ │ Cargo.toml

│ └───src

│ main.rs

├───common

│ │ Cargo.toml

│ └───src

│ lib.rs

├───service1

│ │ Cargo.toml

│ └───src

│ main.rs

└───service2

│ Cargo.toml

└───src

main.rs

The root Cargo.toml is the same workspace definition. What changed is underneath.

The common crate got simpler. In Step 06, it defined a Message enum with seven variants covering every possible exchange. Here, we dropped the enum entirely. We only have four plain structs: ProcessRequest, ProcessResponse, TransformRequest, TransformResponse. Each carries a request_id field alongside the payload data. The routing is now handled by HTTP endpoints (/process, /transform), so we do not need an envelope enum to tell services which operation we want. The URL itself carries that intent.

The services switched from “read stdin in a loop” to “listen on a TCP port and handle HTTP routes.” They use Axum as the HTTP framework and tokio as the async runtime. Service1 listens on port 3001, Service2 on port 3002. Both expose a POST endpoint for their business logic and a GET /health endpoint.

The app crate no longer spawns child processes. It does not manage lifetimes, pipes, or shutdowns. It just creates an HTTP client with reqwest, sends POST requests, and reads the JSON responses. The code is noticeably shorter and simpler than the Step 06 orchestrator.

One new addition: every request now carries a request_id (a UUID). In the sequential version of the app, it might seem like overkill. But we will see in a moment why it matters.

Show Me the Code

Download the project and open step07_distributed_http or read the code on GitHub within your browser.

The common crate

The common/Cargo.toml depends only on serde. No more serde_json needed here since each service handles its own serialization. The lib.rs defines four structs, all deriving Serialize, Deserialize, Debug, and Clone:

#[derive(Debug, Serialize, Deserialize, Clone)]

pub struct ProcessRequest {

pub value: i32,

pub request_id: String,

}

#[derive(Debug, Serialize, Deserialize, Clone)]

pub struct ProcessResponse {

pub value: i32,

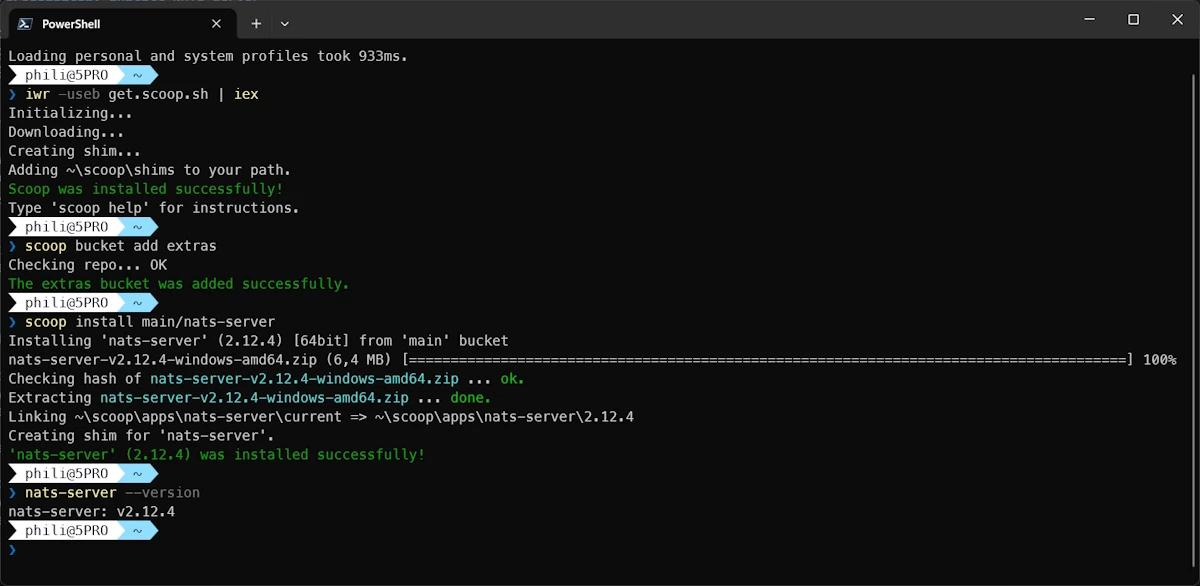

pub processed: bool,